The rapid evolution of AI workloads has ushered in a new era of computational demands, pushing traditional infrastructure models to their limits. Hyperconverged systems, once hailed as the silver bullet for IT simplification, now face an unexpected challenge: AI-driven compute fragmentation. This phenomenon is reshaping how enterprises approach their data center strategies, forcing a reevaluation of resource allocation in an increasingly AI-centric world.

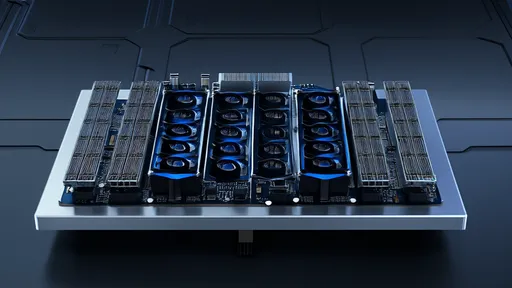

At the heart of this transformation lies a fundamental mismatch between the elegant uniformity of hyperconverged infrastructure (HCI) and AI's voracious, unpredictable appetite for specialized compute resources. Where HCI promised tidy consolidation of compute, storage, and networking into standardized nodes, modern AI workloads demand precisely tuned accelerators - GPUs, TPUs, and, increasingly, domain-specific architectures that resist neat packaging into homogeneous building blocks.

The fragmentation manifests in three dimensions. Temporal fragmentation occurs when bursty AI training jobs create peaks and troughs in resource demand that conventional hyperconverged systems struggle to absorb. Spatial fragmentation emerges as different AI models require dramatically different ratios of compute to memory to storage. Perhaps most disruptive is architectural fragmentation, where a single AI pipeline might involve traditional CPUs for data preprocessing, GPUs for model training, and neuromorphic chips for inference - all within workflows that demand millisecond-latency communication between these disparate elements.
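To make the spatial case concrete, here is a minimal Python sketch. It assumes a hypothetical fixed-ratio node and two hypothetical job profiles; the names `Shape`, `NODE`, `TRAINING`, and `PREPROCESS` and all of the numbers are illustrative, not measurements from any real deployment. It fills one node with a single job type and reports how much of each resource is left stranded.

```python
from dataclasses import dataclass

FIELDS = ("gpus", "mem_gb", "storage_tb")


@dataclass(frozen=True)
class Shape:
    """Resources offered by a node or required by one job (illustrative units)."""
    gpus: int
    mem_gb: int
    storage_tb: int


def jobs_per_node(node: Shape, job: Shape) -> int:
    """Number of copies of `job` that fit on `node`; the scarcest resource decides."""
    limits = [
        getattr(node, f) // getattr(job, f)
        for f in FIELDS
        if getattr(job, f) > 0  # a resource the job does not use cannot limit it
    ]
    return min(limits) if limits else 0


def stranded_fraction(node: Shape, job: Shape) -> dict:
    """Fraction of each node resource left unused once the node is full of `job`."""
    n = jobs_per_node(node, job)
    return {f: 1 - (n * getattr(job, f)) / getattr(node, f) for f in FIELDS}


# Hypothetical shapes: one standardized node, two very different workloads.
NODE = Shape(gpus=4, mem_gb=512, storage_tb=20)
TRAINING = Shape(gpus=4, mem_gb=128, storage_tb=2)     # GPU-bound
PREPROCESS = Shape(gpus=0, mem_gb=256, storage_tb=8)   # memory- and storage-bound

for name, job in [("training", TRAINING), ("preprocess", PREPROCESS)]:
    print(name, jobs_per_node(NODE, job), stranded_fraction(NODE, job))
```

Under these assumed numbers, the training job exhausts the GPUs while leaving most of the node's memory and storage unused, and the preprocessing job does the reverse. That is the ratio mismatch described above, reduced to arithmetic.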

This isn't merely a technical challenge; it's fundamentally altering the economics of enterprise computing. The traditional HCI value proposition of predictable scaling and simplified management breaks down when facing AI's heterogeneous requirements. Data center operators report stranded resources - GPU cycles sitting idle while adjacent nodes starve for memory bandwidth, or storage arrays sitting unused while they wait for preprocessing to complete. The very consolidation that made HCI attractive becomes its Achilles' heel in AI environments.

Emerging solutions are taking shape at the intersection of hardware and software innovation. Some vendors are developing "heterogeneous hyperconverged" systems that maintain HCI's management simplicity while accommodating diverse accelerator types. These systems rely on resource disaggregation, allowing compute, memory, and storage to be composed dynamically based on workload requirements. Scheduling algorithms coupled with cache-coherent interconnects such as Compute Express Link (CXL) let these resources be pooled and shared across nodes, mitigating fragmentation effects.
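The idea of composing resources on demand can be sketched in a few lines of Python. This is a toy model under stated assumptions, not any vendor's API: `ResourcePool`, `compose`, and `release` are hypothetical names, and the pool sizes are invented. It shows only the bookkeeping, where a request is granted all at once from a shared pool or not at all.

```python
class ResourcePool:
    """Hypothetical rack-level pool of disaggregated resources."""

    def __init__(self, **capacity):
        self.free = dict(capacity)

    def compose(self, **request):
        """Reserve every requested amount together, or return None if any is short."""
        if any(self.free.get(kind, 0) < amount for kind, amount in request.items()):
            return None  # all-or-nothing: never hand out a partial system
        for kind, amount in request.items():
            self.free[kind] = self.free.get(kind, 0) - amount
        return dict(request)

    def release(self, allocation):
        """Return a previously composed allocation to the pool."""
        for kind, amount in allocation.items():
            self.free[kind] = self.free.get(kind, 0) + amount


# Illustrative numbers only.
pool = ResourcePool(gpus=8, mem_gb=2048, storage_tb=100)

trainer = pool.compose(gpus=6, mem_gb=512, storage_tb=10)
etl = pool.compose(gpus=0, mem_gb=1024, storage_tb=60)
too_big = pool.compose(gpus=4, mem_gb=256, storage_tb=5)   # refused: 2 GPUs free

pool.release(trainer)                                      # training job ends
retry = pool.compose(gpus=4, mem_gb=256, storage_tb=5)     # now succeeds

print(too_big, retry, pool.free)
```

Because the GPU-heavy and memory-heavy requests draw from the same pool rather than from fixed node shapes, neither strands the other's unused resources. A real system would also have to account for interconnect bandwidth and placement locality, which this sketch ignores.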

The software layer is undergoing an equally radical transformation. New abstraction frameworks are emerging to present a unified view of fragmented resources to AI workloads. These systems track resource utilization at fine granularity, enabling micro-scheduling decisions that pack AI jobs into available resource fragments with little waste. Some solutions borrow concepts from wavelength division multiplexing in telecommunications, treating different resource types as parallel channels that can be independently allocated and combined as needed.
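One simple way to pack jobs into leftover fragments is a greedy best-fit heuristic, sketched below in Python. It is an assumption chosen for illustration, not the method any particular scheduler uses; the node names, job names, and `CAPACITY` values are hypothetical. Jobs are placed largest first, each into the fragment that would have the least normalised slack afterwards.

```python
# Hypothetical full-node capacities, used only to put different units on one scale.
CAPACITY = {"gpus": 8, "mem_gb": 1024}


def fits(job, free):
    """True if the fragment has enough of every resource for the job."""
    return all(free[k] >= job[k] for k in CAPACITY)


def slack_after(job, free):
    """Normalised resources left over after placement; smaller is a tighter fit."""
    return sum((free[k] - job[k]) / CAPACITY[k] for k in CAPACITY)


def size(job):
    """Normalised job size, used to place the largest jobs first."""
    return sum(job[k] / CAPACITY[k] for k in CAPACITY)


def best_fit(jobs, fragments):
    """Return {job_name: node_name}; a job that fits nowhere maps to None."""
    free = {node: dict(res) for node, res in fragments.items()}  # copy, don't mutate
    placement = {}
    for name, job in sorted(jobs.items(), key=lambda kv: size(kv[1]), reverse=True):
        candidates = [n for n in free if fits(job, free[n])]
        if not candidates:
            placement[name] = None
            continue
        node = min(candidates, key=lambda n: slack_after(job, free[n]))
        for k in CAPACITY:
            free[node][k] -= job[k]
        placement[name] = node
    return placement


# Illustrative fragments (free resources per node) and pending jobs.
fragments = {
    "node-a": {"gpus": 2, "mem_gb": 128},
    "node-b": {"gpus": 6, "mem_gb": 512},
    "node-c": {"gpus": 0, "mem_gb": 768},
}
jobs = {
    "finetune": {"gpus": 4, "mem_gb": 256},
    "inference": {"gpus": 2, "mem_gb": 64},
    "etl": {"gpus": 0, "mem_gb": 512},
    "big-train": {"gpus": 8, "mem_gb": 512},
}

placement = best_fit(jobs, fragments)
print(placement)
```

Multi-dimensional packing of this kind is a hard combinatorial problem in general, so a greedy pass like this gives a reasonable placement rather than a guaranteed optimal one. In this example `big-train` stays unplaced because no single fragment has eight free GPUs, even though the cluster as a whole does.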

Industry observers note this evolution mirrors earlier transitions in computing history, where each new workload paradigm eventually demanded its own infrastructure optimization. Just as virtualization drove the original convergence wave that birthed HCI, AI's unique characteristics are now driving its fragmentation. The difference this time lies in the pace of change - where previous infrastructure evolutions unfolded over years, AI's breakneck development compresses adaptation cycles into quarters.

Looking ahead, the most successful approaches will likely blend elements of convergence and fragmentation. Early adopters are experimenting with "strategically fragmented" architectures that maintain HCI's operational simplicity where possible while embracing controlled heterogeneity where necessary. This balanced approach acknowledges that while AI workloads demand specialized resources, the broader IT ecosystem still benefits from consolidation and standardization.

The implications extend far beyond infrastructure design. This shift is reshaping organizational structures, with AI operations teams increasingly collaborating with traditional IT groups to navigate the new landscape. Financial models are evolving too, as capital expenditures give way to more flexible consumption models that can accommodate rapid changes in AI resource requirements. Even sustainability calculations are being rewritten, as efficient resource utilization becomes both an economic and environmental imperative in fragmented AI environments.

As enterprises navigate this transition, one truth becomes increasingly clear: the future belongs to those who can master the paradox of simultaneous consolidation and fragmentation. The next generation of hyperconverged systems won't seek to eliminate AI-driven fragmentation, but rather to harness it - creating infrastructures that are both precisely tailored and effortlessly scalable in the face of AI's relentless evolution.

By /Aug 15, 2025
