The concept of cross-device context-aware latency is rapidly gaining traction in the tech industry as seamless connectivity becomes a non-negotiable expectation for modern users. Unlike traditional latency issues that focus solely on network performance, this emerging challenge encompasses the synchronization delays between multiple devices operating within an interconnected ecosystem. From smart homes to wearable tech and industrial IoT, the frictionless transfer of contextual data across devices is now a critical component of user experience.

Understanding the Core Challenge

At its heart, cross-device context-aware latency is the delay between a change in contextual information (such as location, activity, or environmental data) on one device and the moment that change is reflected on the others. Imagine pausing a movie on your living room TV only to find that your tablet takes several seconds to reflect the action. Or consider a fitness tracker that doesn't immediately update your smartphone with your latest workout metrics. These gaps, though seemingly minor, disrupt the fluidity of digital interactions and erode trust in interconnected systems.

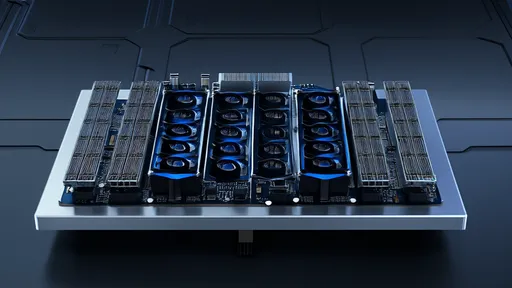

The problem is compounded by the diversity of hardware and software stacks involved. Each device operates with its own processing capabilities, power constraints, and communication protocols. A battery-powered smartwatch might prioritize energy efficiency over instantaneous data transmission, while a mains-powered cloud server faces no such power budget. Bridging these disparities without introducing perceptible lag requires a delicate balance of technical optimizations.

The Invisible Architecture Behind Seamless Experiences

Beneath the surface of smooth cross-device interactions lies a complex web of technologies working in concert. Edge computing has emerged as a pivotal solution, processing data closer to the source to reduce round-trip times to centralized servers. Meanwhile, advancements in federated learning allow devices to collaboratively improve their contextual awareness without constantly exchanging raw data, thus minimizing bandwidth consumption.
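To make the federated learning idea concrete, here is a minimal sketch of one common aggregation rule, federated averaging. The article does not say which rule any particular product uses, so treat this as an illustration only; the function name and the flat list-of-floats weight format are assumptions made for the example.

```python
from typing import List, Tuple

Weights = List[float]


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Combine per-device model weights into one shared model.

    Each update is (weights, local_sample_count). Only weights leave
    the device; the raw contextual data used to train them does not.
    """
    if not updates:
        raise ValueError("need at least one device update")
    total_samples = sum(count for _, count in updates)
    if total_samples <= 0:
        raise ValueError("sample counts must sum to a positive number")

    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, count in updates:
        if len(weights) != dim:
            raise ValueError("all devices must report the same weight shape")
        for i, w in enumerate(weights):
            # Devices with more local samples contribute proportionally more.
            averaged[i] += w * count / total_samples
    return averaged


# Hypothetical updates from a watch (1 sample) and a phone (3 samples).
shared_model = federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)])
```

The bandwidth saving comes from what is not sent: each device uploads a small weight vector rather than a stream of raw sensor readings.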

Protocols like Matter (formerly Project CHIP) are attempting to standardize communication between disparate smart home devices, while WebRTC implementations enable real-time data streaming across browsers and applications. However, these technologies must contend with the physical limitations of wireless communication—radio interference, signal attenuation, and the immutable speed of light all impose hard boundaries on latency reduction.
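The speed-of-light limit is easy to quantify. The sketch below computes the lowest possible round-trip time for a given path length; the function name and the `refractive_index` parameter are assumptions for illustration, and real links add routing, queuing, and processing delays on top of this floor.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def min_round_trip_ms(distance_km: float, refractive_index: float = 1.0) -> float:
    """Physical lower bound on round-trip time over a path of this length.

    refractive_index=1.0 models vacuum; larger values model slower media
    such as optical fibre (the exact value depends on the cable).
    """
    if distance_km < 0 or refractive_index < 1.0:
        raise ValueError("distance must be >= 0 and refractive index >= 1")
    one_way_s = distance_km * 1000 * refractive_index / SPEED_OF_LIGHT_M_PER_S
    return 2 * one_way_s * 1000


# A server 1,000 km away can never answer faster than this.
print(round(min_round_trip_ms(1000), 2))  # prints 6.67
```

This is one reason processing data near the device helps: shortening the path is the only way to lower the floor itself.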

The Human Factor in Latency Perception

Interestingly, not all latency is created equal in human perception. Studies in human-computer interaction reveal that users can tolerate slightly longer delays for certain types of contextual updates than for direct interactions. For instance, a smart thermostat adjusting based on occupancy has more leeway than a voice assistant responding to a spoken command. This nuance allows engineers to prioritize resources where they matter most.

Psychologically, expectation plays a crucial role. Users accustomed to near-instant smartphone responses grow impatient with smart home delays, even if the underlying technology is vastly different. This spillover of smartphone latency expectations pushes developers to pursue seemingly impossible levels of synchronization across fundamentally asymmetric systems.

Emerging Solutions and Their Trade-offs

Several innovative approaches are tackling cross-device latency from different angles. Predictive prefetching uses machine learning to anticipate user actions and preemptively sync relevant data across likely next-use devices. While effective, this method risks increased energy consumption and potential privacy concerns from aggressive data collection.
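As a rough illustration of predictive prefetching, the sketch below uses a simple first-order frequency model in place of a full machine learning pipeline: it counts which device a user tends to pick up next and only recommends a prefetch when that guess is confident enough. The class name, device labels, and the `min_probability` threshold are all assumptions for the example.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


class NextDevicePredictor:
    """Counts device-to-device transitions and predicts the likely next device."""

    def __init__(self) -> None:
        self._transitions: Dict[str, Counter] = defaultdict(Counter)

    def observe(self, history: List[str]) -> None:
        """Record each consecutive pair of devices in a usage history."""
        for current, following in zip(history, history[1:]):
            self._transitions[current][following] += 1

    def predict(self, current: str, min_probability: float = 0.5) -> Optional[str]:
        """Return the device worth prefetching to, or None if unsure."""
        counts = self._transitions.get(current)
        if not counts:
            return None
        device, hits = counts.most_common(1)[0]
        # Skipping low-confidence guesses avoids waking devices for nothing.
        if hits / sum(counts.values()) >= min_probability:
            return device
        return None


predictor = NextDevicePredictor()
predictor.observe(["phone", "tv", "phone", "tv", "phone", "tablet"])
target = predictor.predict("phone")  # "tv": 2 of 3 observed transitions
```

The threshold is where the energy trade-off shows up: lowering it syncs more eagerly and wastes more battery on wrong guesses, while raising it saves power but prefetches less often.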

Another promising direction involves context-aware quality of service (QoS) prioritization, where network resources are allocated dynamically based on the criticality of specific data flows. A health monitoring system might receive priority over a background music sync, for example. Implementing such systems requires deep integration across network layers and device operating systems, which is a challenge in fragmented ecosystems.
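At the application level, the core of this idea can be sketched as a priority queue in which more critical flows are always sent first. The flow classes and their ranking below are assumptions chosen to match the health-versus-music example; a real deployment would also need support from the network and operating system layers.

```python
import heapq
import itertools
from typing import List, Tuple

# Assumed ranking for illustration: lower number means more urgent.
PRIORITY = {"health": 0, "interactive": 1, "background": 2}


class ContextAwareQueue:
    """Outgoing message queue that serves the most critical flow first."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._sequence = itertools.count()  # keeps FIFO order within a class

    def enqueue(self, flow_class: str, payload: str) -> None:
        # Unknown flow classes fall back to the lowest priority.
        priority = PRIORITY.get(flow_class, max(PRIORITY.values()))
        heapq.heappush(self._heap, (priority, next(self._sequence), payload))

    def dequeue(self) -> str:
        _, _, payload = heapq.heappop(self._heap)
        return payload

    def __len__(self) -> int:
        return len(self._heap)


queue = ContextAwareQueue()
queue.enqueue("background", "music sync")
queue.enqueue("health", "heart-rate alert")
queue.enqueue("background", "photo backup")
first = queue.dequeue()  # "heart-rate alert", despite being enqueued second
```

The sequence counter matters: without it, two messages of equal priority would be ordered by comparing payloads, which would reorder traffic within a class.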

Short-range radio technologies such as Ultra-Wideband (UWB), which supports precise ranging between nearby devices, show particular promise for device handoff scenarios. However, both ends of a handoff need UWB-capable hardware, which creates a chicken-and-egg problem for widespread adoption.

Measuring What Matters in Context-Aware Systems

Traditional latency metrics often fall short in capturing the true user experience in cross-device scenarios. New evaluation frameworks are emerging that consider contextual completeness—the point at which all relevant devices have synchronized to a new state—rather than just transmission delays. These holistic measures account for the fact that users perceive the system as a whole, not as individual components.
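One way to express contextual completeness in code is as the time until the slowest relevant device has applied the new state. The function below is a minimal sketch under two assumptions: device clocks are synchronized well enough to compare timestamps, and a device that has not yet applied the change reports `None`. The names are hypothetical.

```python
from typing import Dict, Optional


def contextual_completeness_ms(
    change_ts_ms: float,
    applied_ts_ms: Dict[str, Optional[float]],
) -> Optional[float]:
    """Delay until every relevant device reflects the new state.

    Returns None while any device is still out of date, because the
    system as a whole has not yet reached the new state.
    """
    if not applied_ts_ms:
        return None
    if any(ts is None for ts in applied_ts_ms.values()):
        return None
    # The slowest device defines when the user sees a consistent system.
    return max(applied_ts_ms.values()) - change_ts_ms


# Pause pressed at t=1000 ms; the tablet lags behind the other devices.
delay = contextual_completeness_ms(
    1000.0, {"tv": 1040.0, "tablet": 1900.0, "phone": 1120.0}
)
```

Here the TV updates within 40 ms, yet the metric reports 900 ms, which matches what the user actually experiences when glancing at the tablet.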

Field studies reveal that consistency often matters more than raw speed. Users prefer predictable, slightly longer delays over variable response times that create uncertainty. This insight drives the development of synchronization algorithms that prioritize determinism over occasional faster performance.
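The preference for predictability can be sketched in two small helpers: one measures how variable a set of delays is, and one computes how long to hold an early update so that every update appears at the same fixed delay. Both function names and the sample numbers are invented for illustration and are not measurements from any study.

```python
import statistics
from typing import List


def jitter_ms(delays_ms: List[float]) -> float:
    """Spread of the observed delays (population standard deviation)."""
    return statistics.pstdev(delays_ms)


def hold_time_ms(actual_delay_ms: float, target_delay_ms: float) -> float:
    """Extra wait needed to present an update at a fixed target delay.

    Updates that already missed the target are shown immediately.
    """
    return max(0.0, target_delay_ms - actual_delay_ms)


# Made-up samples: "steady" is slower on average but far more predictable.
steady = [300.0, 310.0, 290.0, 300.0]
bursty = [80.0, 500.0, 120.0, 400.0]

steady_is_slower = statistics.mean(steady) > statistics.mean(bursty)
steady_is_calmer = jitter_ms(steady) < jitter_ms(bursty)
```

Holding early updates trades a little average speed for determinism, which is the design choice the field observations point toward.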

The Road Ahead for Cross-Device Harmony

As we move toward increasingly ambient computing environments, solving context-aware latency will only grow in importance. The next generation of spatial computing and augmented reality applications will demand even tighter synchronization between wearable displays, environmental sensors, and cloud services. Failures in this domain won't just cause frustration—they may literally break the illusion of digital objects existing persistently in physical space.

Industry consortia and standards bodies are beginning to address these challenges, but the rapid pace of innovation often outstrips formal standardization. In the interim, developers must navigate a landscape of proprietary solutions and workarounds, all while maintaining the illusion of perfect synchronization that users now expect.

Ultimately, conquering cross-device context-aware latency isn't just about shaving milliseconds off transmission times. It's about crafting the technological equivalent of a well-rehearsed orchestra—where diverse instruments (devices) play in perfect harmony, creating an experience greater than the sum of its parts. The companies that master this symphony will define the next era of connected experiences.

By /Aug 15, 2025
